AI + Customer Research = ??

AI can augment and speed up human-centered research. Here are a few ways.

More and more, people are asking me about AI’s role in customer research. Often, this boils down to the same big question:

“Can talking to Claude replace talking to people?”

And, honestly, I feel you on this. I know that a huge percentage of my readers are either busy founders, introverted engineers who like to avoid talking to people, or, often, both.

So I totally get where people are coming from with that question and why the prospect of being able to avoid talking to people might be exciting.

But…

I don’t think AI can ever replace talking to people. Human beings, and human organizations, are far too weird, idiosyncratic, illogical, emotional, and inconsistent for an LLM to ever fully capture. (Call me a romantic, but I personally find that idiosyncrasy beautiful. Engineer founders probably find it maddening.)

Yet I do think AI can be a useful asset in making customer research easier and, in turn, in lowering the barriers to good customer research that still keeps human beings at the center, as both subject and interviewer.

For example, when Deploy Empathy came out in 2021, AI was already improving customer research in the form of automated transcripts. When I started interviewing, we’d have to wait about a week to get a transcript back from a human transcriber. By contrast, as part of writing this article, I uploaded the audio of an interview with a reader to Otter.ai, and it transcribed it with about 95% accuracy in five minutes. From a week to five minutes! That’s incredible.

This is where I think AI can be really useful: in decreasing the cycle time of customer research and, in turn, giving companies insights significantly faster. And the more companies see the transformative power of insights from customer research, the more they want it. That means AI is likely to ultimately be a good thing for customer research.

LLMs have advanced a lot in the past five years. As part of the research for the expanded and revised edition of Deploy Empathy that I’m planning to release later this year, I’ve been talking to people to understand how they’re using AI in their customer research.

Here are a few.

Have AI adapt your interview scripts to your product

The interview scripts in Deploy Empathy are intended to be adapted to your product and what you’re looking to learn.

Chaz Yoon, founder of Milled.com, a tool that helps e-commerce companies keep tabs on their competitors’ email marketing, wanted to understand why people were coming to the site and what led them to upgrade their plans. A few months ago, when he was planning out a research project, he uploaded my book and his company’s landing page to Claude and asked it to recommend a script based on the book.

Claude gave him an adapted script, which he took back to his team; they tweaked it and ran with it.

Here’s a modified transcript of Chaz’s conversation with Claude:

Chaz: I'm going to be conducting customer research interviews. I want to incorporate an approach outlined by the book Deploy Empathy. Pretend you are the interviewer. […]

Consider the business as recently launched, with some traction but looking to get to the next level. We are reaching out to people who are on the free plan, are using the product, but have not yet upgraded. We're trying to understand how Milled fits into their work and what we can be doing better.

Claude: This sounds like a perfect case for both Switch interviews (to understand why people started using Milled) and understanding what might drive upgrades to paid plans. I would recommend:

1. For recruiting: Since these are existing free users, you can use the email templates from Chapter 23. You'll want to emphasize that you're interested in learning about how they use Milled in their work, not trying to sell them anything.

2. For the interview script: I'd recommend starting with the Long-time Customer Interview script (Chapter 41) but modified to focus on:

- Their overall process for tracking and analyzing marketing emails

- What they were doing before they found Milled

- How they currently use Milled in their workflow

- What other tools they use alongside Milled

- What would make Milled more valuable to them

3. Key areas to probe during interviews:

- The frequency with which they need to analyze marketing emails

- The pain points in their current process

- Whether they're currently paying for any other tools in this space

- Who else in their organization is involved in this work

Chaz: I would like you to just give me questions that I can use in the interview. The product is a search engine, so they are already using search queries to find emails they are looking for. The target users are email marketers, so I want to understand why they are searching for the keywords they use.

Claude:

Opening:

"Thank you for taking the time to talk with me today. I'm interested in learning more about how you use Milled in your work. Before we start, do you have any questions for me?"

Core Questions:

- "Could you tell me about what you do in your role?"

- "Walk me through the last time you searched for marketing emails - what were you looking for and why?"

- "What led you to look up those particular emails?"

- "Before you found Milled, how did you gather this kind of information?"

- "How often do you find yourself needing to look up competitor emails?"

- "Can you tell me about any other tools you use alongside Milled?"

- "What's the most challenging part about tracking competitor marketing?"

- "How do you use what you find in the emails to inform your work?"

- "Who else in your organization uses this kind of competitive intelligence?"

Going Deeper:

- "When you find something interesting in an email, what do you do next?"

- "What makes a search particularly useful for you?"

- "Can you walk me through how this information fits into your broader marketing planning?"

The "Reaching for the Door" Question:

- "Thank you so much for sharing all of this with me today. Is there anything else you think I should know about how you use Milled or what would make it more valuable for you?"

Overall, not bad.

Claude went back and forth on which script to use (Switch or Long-time Customer), and the wording of the questions leans toward the stilted, but it’s not terrible, especially as a first draft to take to your team. I was happy to see that key questions about process and pain/frequency were included.

For example, these questions are good, as they prompt the person being interviewed to imagine specific situations:

"Walk me through the last time you searched for marketing emails - what were you looking for and why?"

"Can you walk me through how this information fits into your broader marketing planning?"

However, there are a few areas where the questions are not good. This is one of the most glaring problems:

- "Thank you so much for sharing all of this with me today. Is there anything else you think I should know about how you use Milled or what would make it more valuable for you?"

Never ask people how you could make a product more valuable to them. If there are ways, it will come through. Don’t ask customers to brainstorm for you—it’s their role to have the problems, and your role to figure out how your organization might be able to solve them given your unique capabilities and strategy.

Don’t constrain the Reaching for the Door question to “how you use Milled”; it’s best to let it end after “you think I should know?” There might be something more valuable they can tell you about the steps before or after using your product, and that won’t come through if you’ve asked them to focus on your product.

Hmm, how to correct Claude on this, and stop it from suggesting questions like that in the future? More thoughts on that towards the bottom of this issue. But let’s return to using it as an asset to reduce the time it takes to do research.

Overall, though, I’d say Claude did a decent job here. As with the transcription tools, there are areas that need to be cleaned up. More importantly, it saved Chaz and his team 1-2 hours by his estimate, and those were 1-2 additional hours they were able to spend talking to users. That’s a win in my book.

Claude as your interviewing coach?

One of my favorite parts about doing customer interviews is that you always find a nugget of gold that you weren’t expecting.

That’s exactly what happened when I was talking to Chaz a few weeks ago. I’d gone into the call hoping to hear about how Claude helped him adapt interview scripts, which I did. What I didn’t expect was that the interview would expose a hole in the book: guidance on how to self-assess and become a better interviewer.

After completing the interviews, Chaz uploaded the transcripts to Claude, and it gave him strengths, weaknesses, and areas for improvement:

Chaz: Attached is an AI summary of the meeting. How did we do?

Claude: Based on Deploy Empathy's principles, I notice some areas where the interview could have been improved:

AREAS FOR IMPROVEMENT:

1. The interview seems to have included explanations about Milled's business model and pricing changes. According to Deploy Empathy, we should avoid explaining things during interviews as it breaks the trust bubble.

2. We don't see many instances of digging deeper into "why" - for example, when she mentioned using Chrome bookmarks, we could have asked "Can you walk me through the last time you saved something to your bookmarks?"

3. It seems like feature feedback was directly solicited (about the email map feature). Deploy Empathy suggests letting feature requests emerge naturally rather than asking about specific features.

To improve future interviews, I would recommend:

- Focus more on asking about specific instances ("Tell me about the last time you...")

- Avoid explaining features or business decisions

- Use more follow-up questions when interesting processes are mentioned

- Let the user guide more of the conversation rather than asking about specific features

Again, overall, the advice is mostly correct, especially the last four points. But there are a couple of issues.

For example, I’d agree with Claude that spending a lot of time explaining the business model probably isn’t ideal, but its reasoning comes from an entirely different, unrelated context:

“According to Deploy Empathy, we should avoid explaining things during interviews as it breaks the trust bubble.”

The advice against explaining is meant for situations where you might get defensive about why, for example, a particular feature was designed in a way that doesn’t make sense to the user.

Second, it misunderstands the book’s discussion of feature requests:

It seems like feature feedback was directly solicited (about the email map feature). Deploy Empathy suggests letting feature requests emerge naturally rather than asking about specific features.

If you’re looking for feedback on a particular feature, it’s fine to ask about it. Heck, that’s sometimes the entire purpose of usability interviews! There’s a difference between asking “what features should we add?” (bad) and “Can we talk about how you used [this specific feature]…” (fine).

But again, overall, it does a decent job as an interviewing coach. Certainly a better job than the book, since I didn’t provide any guidance on that topic.

I’m now planning to add a chapter on self-reflection and improvement as an interviewer. I’ve found it helpful to listen back to my own interviews and to get feedback from coworkers, but if you’re a founder, you might not have the time or coworkers for that.

I’d love to hear from you about how you analyze your own performance in interviews and have helped yourself improve. Think back over the last few years—how have your interviewing skills changed since your first interviews after reading Deploy Empathy?

Pull out patterns and themes from interview transcripts

When I started interviewing, the first thing we would do after getting the human-transcribed transcripts was break out a highlighter and start reading through the interviews for common patterns and themes. Often, each member of the project team would do this, so 2-3 of us were reading each interview multiple times. I have many happy memories of sitting around with coworkers, our feet propped up on the desk, thick piles of transcripts in our laps, gently chewing the caps of our highlighters in intense focus, with each person periodically letting out a delighted “oooooh!” when they found an exciting commonality.

As delightful and informative as that was, it did take a lot of time. And since a core readership of my book is founders, I have no illusions that most people reading this have that kind of time.

This is where uploading your interview transcripts to an LLM and asking it to find common patterns and themes can be helpful. I’ve seen several people do this, and it does a pretty good job at a first pass.

But I want to stress that while it can be helpful, you cannot delegate all of the analysis work to an LLM.

For example, here is what Claude said were the valuable insights from Chaz’s interview with a user:

Claude:

VALUABLE INSIGHTS CAPTURED:

- Her process involves planning content months in advance

- She uses Milled both for her own business and client work

- She has a clear pain point around organization (currently using browser bookmarks)

- The tool fits into a broader workflow with Canva and Klaviyo

Those points might be accurate, but it isn’t particularly deep analysis. The LLM doesn’t have the full context on your business. Even if you’ve been working with an LLM for a while and it has a pretty decent understanding of your business, it will never understand your business, your industry, or your team’s unique capabilities and skills as well as you do. Two people from two companies in the same industry could read the same five interview transcripts and walk away with entirely different takeaways and actions for their businesses.

I’d suggest reading the interview transcripts yourself first, then uploading them to the LLM as a way to help refine your thinking. From there, you can expand on or correct its analysis.

The ethics of uploading your transcripts to AI

One looming question with all of this is whether it’s ethical to upload your transcripts to an LLM. After all, the person you’re interviewing has consented to talk to you, but not to Claude or ChatGPT.

The issues around privacy and ethics of LLMs are far beyond the scope of my expertise and the subject of my book, but I also don’t think we should throw our hands up in the air and say “I guess it’s fine?”

So, here are a couple of ideas for how to manage this:

At a minimum: I’d suggest removing any identifying details about the person you’ve talked to. For example, if they state their full name at the beginning of the interview, consider removing that from the transcript and using their initials instead.

A best practice: If you’re getting signed written consent from the people you talk to, you could add a line asking for their consent to have the information processed through LLMs.

If your company has a policy around this, or has figured out a happy medium, I’d love to hear from you.
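For the minimum step above (swapping a person’s name for their initials), here’s a minimal Python sketch, assuming your transcript is plain text and you know the interviewee’s full name. The function names `initials` and `redact_name` are hypothetical helpers for illustration, not part of any tool mentioned here. It only catches the name you pass in, so nicknames, company names, email addresses, and other identifying details still need a read-through by a human.

```python
import re


def initials(full_name: str) -> str:
    """Hypothetical helper: turn a name like 'Jane Q. Doe' into 'JQD'."""
    return "".join(part[0].upper() for part in full_name.split() if part)


def redact_name(transcript: str, full_name: str) -> str:
    """Hypothetical helper: replace the full name, then each name part, with initials.

    Matching is case-insensitive and limited to whole words, so 'Jane'
    won't touch 'Janet'. Anything other than the name itself (nicknames,
    employer, email addresses) is NOT redacted and needs a manual review.
    """
    replacement = initials(full_name)
    # Full name first, then the individual parts, longest first.
    candidates = [full_name] + sorted(full_name.split(), key=len, reverse=True)
    for candidate in candidates:
        pattern = r"\b" + re.escape(candidate) + r"\b"
        transcript = re.sub(pattern, replacement, transcript, flags=re.IGNORECASE)
    return transcript


if __name__ == "__main__":
    text = "Jane Doe: Hi, I'm Jane Doe. Jane here again."
    print(redact_name(text, "Jane Doe"))
```

Replacing the full name before the individual parts keeps the output consistent (“JD” everywhere) rather than leaving a stray surname behind after the first name has been swapped out.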

An aside: Writing a book with AI as a reader

As an aside, this brings up something that AI is making me think about as a writer and as I work on this expanded edition. When you bring up AI around writers, the primary concern is about the IP and copyright implications of LLMs, and I share those concerns. Yet a concern I haven’t heard from other writers is how LLMs might change how we write and who we write for.

If readers are going to upload the book to LLMs and ask for advice based on it, I need to think not only about what my readers will take from the book and whether it’s clear to them, but also about whether it’s clear enough for Claude or ChatGPT to give advice that I’d consider good. There might be nuance that a human can easily make sense of but that an LLM takes too literally or doesn’t know how to interpret. I would hate for someone to upload the book to Claude, ask it for advice, and get what I would consider bad advice that is ostensibly from my work.

I was only thinking about human readers in the first version of the book, and interviewed 30 beta readers during the editing process. But this time, I need to think about the LLM reader, too.

Maybe this time, I should upload the book to Claude, ask it for advice based on the book, and revise based on whether its advice is correct. Or maybe I should create a version of the book that’s intended just for LLMs, with very explicit instructions on what to tell people. Or create a Custom GPT that I’ve spent hours training. I don’t know.

I haven’t quite figured this out yet, but I’m thinking about it. One of the tenets of user experience is that people are going to find a way to do what they want to do regardless of the designer’s well-honed opinion, and that it’s more productive to support human behavior patterns than to fight them.

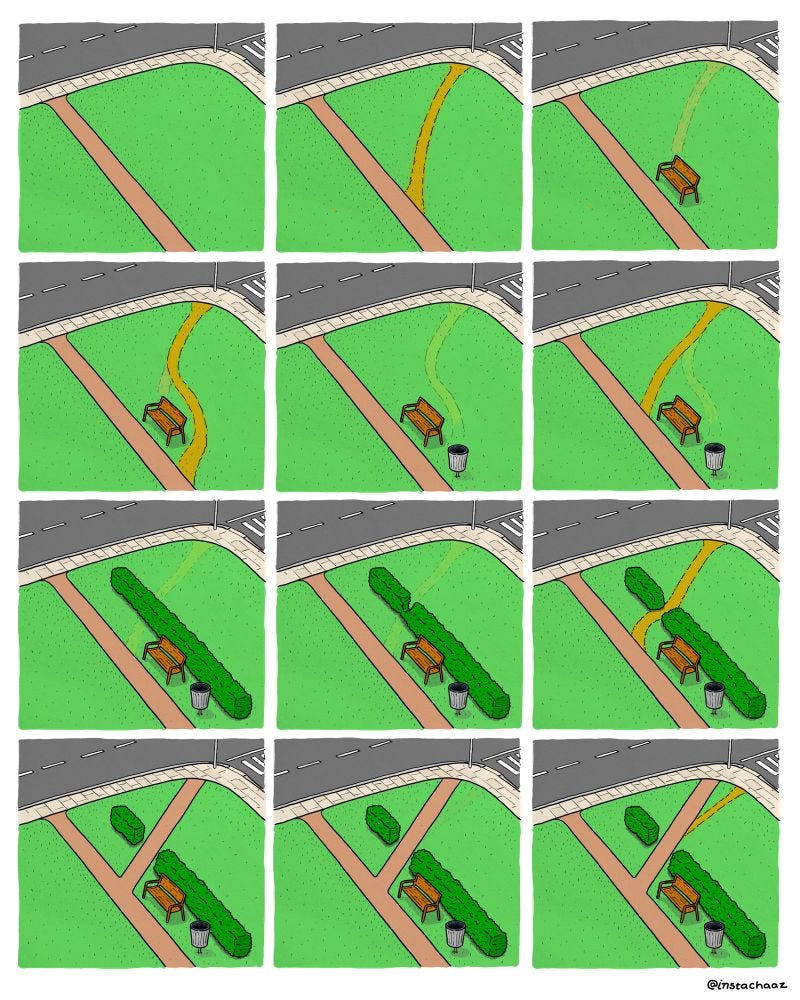

For example, take Desire Paths, as delightfully drawn by Chaz Hutton:

If people (you, my readers) are going to upload my book to ChatGPT anyway, I’m forced to think about how ChatGPT will read and interpret it. The shortcut of using LLMs as a coworker is becoming hard-wired, and acting like it doesn’t exist would be a bit obstinate on my part.

Wanna chat?

To close this out for today, I want to note that I’m looking to talk to more people about how they’re using AI to augment their customer research. So please let me know if you’d be up for a chat sometime in March!

All the best,

Michele

One last thing: Do you live near Darmstadt, Germany? 🇩🇪

The Crafting Products Meetup will be hosting a customer interview workshop with in-person practice on February 17, and they'll be giving away a copy of my book. RSVP here.
